This is a post I drafted a couple of months ago but am only now getting around to releasing. Hope you enjoy!

Project-based learning

In any discipline that requires a hands-on approach, engineers and scientists can’t really learn much from reading alone. Rather, by learning through doing, professionals gain not only intuition but also experience with techniques they will carry with them into more advanced programs. Here are a couple of projects I worked on while reading the book, as well as projects inspired by the concepts it teaches.

Example Projects

I’ve recently been very interested in generative networks and how computers can use latent features to generate synthetic images, speech, text, and so much more.

Here are some sample exercises I was able to work on while diving into this book:

Vanilla Autoencoders

I used vanilla autoencoders to generate image reconstructions using the Fashion MNIST dataset.
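To make the idea concrete, here is a minimal sketch of a vanilla autoencoder. It is not the code behind the plots in this post: it assumes plain NumPy instead of a deep learning framework, a single dense layer on each side, and synthetic 64-pixel "images" as a stand-in for Fashion MNIST. All names and sizes are made up for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-in data (assumption): 256 fake "images" of 64 pixels that lie in an
# 8-dimensional subspace, so they can be compressed. This is NOT Fashion MNIST.
n_samples, n_pixels, n_latent = 256, 64, 8
basis = rng.normal(size=(n_latent, n_pixels))
X = rng.normal(size=(n_samples, n_latent)) @ basis / np.sqrt(n_latent)

# The encoder and decoder are each one dense layer.
W_enc = rng.normal(scale=0.1, size=(n_pixels, n_latent))
W_dec = rng.normal(scale=0.1, size=(n_latent, n_pixels))

def encode(x):
    """Compress images into low-dimensional latent encodings."""
    return np.tanh(x @ W_enc)

def decode(z):
    """Reconstruct images from latent encodings."""
    return z @ W_dec

def reconstruction_loss(x):
    """Mean squared error between the images and their reconstructions."""
    return float(np.mean((decode(encode(x)) - x) ** 2))

initial_loss = reconstruction_loss(X)

learning_rate = 0.05
for step in range(1000):
    z = encode(X)
    x_hat = decode(z)
    # Backpropagate the mean squared error by hand.
    grad_x_hat = 2.0 * (x_hat - X) / X.size
    grad_W_dec = z.T @ grad_x_hat
    grad_z = grad_x_hat @ W_dec.T
    grad_pre = grad_z * (1.0 - z ** 2)  # derivative of tanh
    grad_W_enc = X.T @ grad_pre
    # Plain gradient descent update (in place, so encode/decode see it).
    W_dec -= learning_rate * grad_W_dec
    W_enc -= learning_rate * grad_W_enc

final_loss = reconstruction_loss(X)
print(f"reconstruction loss: {initial_loss:.3f} -> {final_loss:.3f}")
```

The output of `encode(X)` is what gets plotted when visualizing the latent space, and `decode(encode(X))` is the reconstruction.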

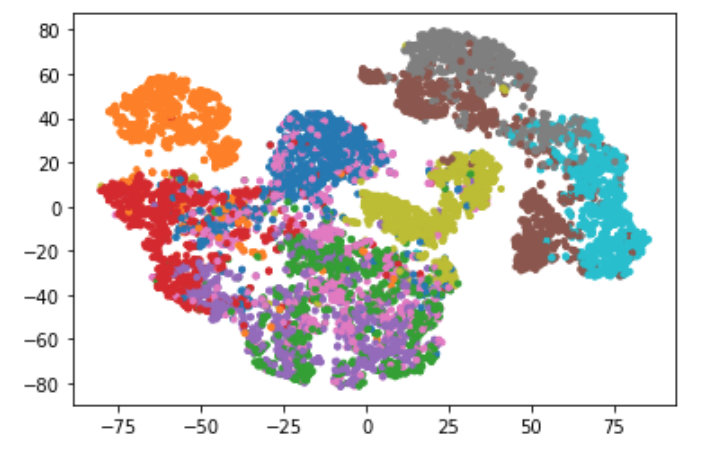

As you can see from the plot above, the network learns low-dimensional latent encodings of the images. I use the t-SNE algorithm to plot the first two components of these encodings. There are 10 clear clusters in the dataset, which corresponds to the 10 clothing classes.

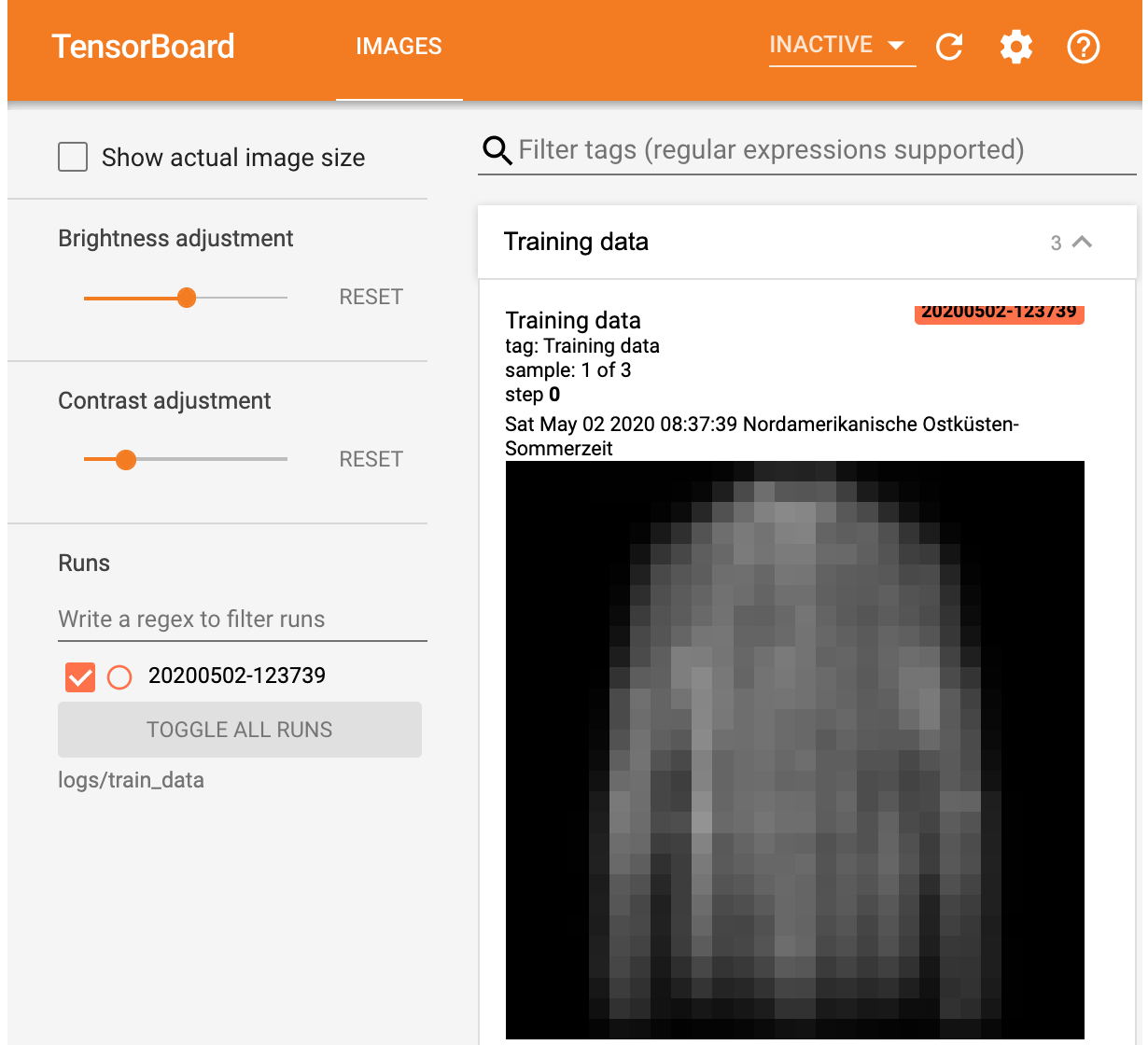

Above, you can see some sample image reconstructions. Clearly, they need a lot of work, but given the network size, they are definitely good enough!

Variational Autoencoders

Variational Autoencoders (VAEs) encode each image as a distribution over the latent space rather than a single point, then sample from that distribution to generate an image reconstruction. As you can see, the image below is very blurry.
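The sampling step in a VAE is commonly written with the reparameterization trick, paired with a KL-divergence penalty that keeps the latent distribution close to a standard normal. The sketch below shows just those two pieces in NumPy. The function names and the example encoder outputs are hypothetical, and this is not the model I trained.

```python
import numpy as np

rng = np.random.default_rng(0)

def sample_latent(mu, log_var, rng):
    """Reparameterization trick: z = mu + sigma * eps, with eps ~ N(0, I)."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps

def kl_to_standard_normal(mu, log_var):
    """KL(N(mu, sigma^2) || N(0, I)), summed over latent dims, per example."""
    return -0.5 * np.sum(1.0 + log_var - mu ** 2 - np.exp(log_var), axis=1)

# Hypothetical encoder outputs for a batch of 4 images and 2 latent dimensions.
mu = np.array([[0.0, 0.0], [1.0, -1.0], [0.5, 0.5], [2.0, 0.0]])
log_var = np.zeros_like(mu)  # log(sigma^2) = 0, i.e. unit variance

z = sample_latent(mu, log_var, rng)      # what the decoder would receive
kl = kl_to_standard_normal(mu, log_var)  # [0.0, 1.0, 0.25, 2.0]
print(z.shape, kl)
```

In a full VAE, the training loss would be the reconstruction error plus this KL term. Writing the sample as `mu + sigma * eps` is what lets gradients flow back into the encoder.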

GANs

As you can see from above, GANs use both a generator and a discriminator to generate synthetic images from random noise.

They have a lot of potential, with applications ranging from image compression and data augmentation to the very popular deepfakes.
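Here is a rough sketch of how the generator and discriminator fit together, again in plain NumPy. It only runs one forward pass and computes the two losses, with no training loop. The single-layer networks, the sizes, and the uniform "real" images are all placeholder assumptions, not what I actually built.

```python
import numpy as np

rng = np.random.default_rng(0)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def generator(noise, W_g):
    """Map random noise vectors to fake 'images' with values in (-1, 1)."""
    return np.tanh(noise @ W_g)

def discriminator(images, w_d):
    """Estimate the probability that each image is real."""
    return sigmoid(images @ w_d)

def binary_cross_entropy(pred, target, eps=1e-7):
    pred = np.clip(pred, eps, 1.0 - eps)  # avoid log(0)
    return float(-np.mean(target * np.log(pred)
                          + (1.0 - target) * np.log(1.0 - pred)))

noise_dim, image_dim, batch = 16, 64, 32
W_g = rng.normal(scale=0.1, size=(noise_dim, image_dim))
w_d = rng.normal(scale=0.1, size=image_dim)

# Placeholder for a batch of real images scaled to [-1, 1].
real = rng.uniform(-1.0, 1.0, size=(batch, image_dim))
fake = generator(rng.standard_normal((batch, noise_dim)), W_g)

p_real = discriminator(real, w_d)
p_fake = discriminator(fake, w_d)

# The discriminator wants real -> 1 and fake -> 0.
d_loss = (binary_cross_entropy(p_real, np.ones(batch))
          + binary_cross_entropy(p_fake, np.zeros(batch)))
# The generator wants the discriminator to say fake -> 1.
g_loss = binary_cross_entropy(p_fake, np.ones(batch))
print(f"d_loss={d_loss:.3f}, g_loss={g_loss:.3f}")
```

Training alternates between lowering `d_loss` with respect to the discriminator's weights and lowering `g_loss` with respect to the generator's weights.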
