Why Scrape the Web?

Developers and researchers often scrape the web Because they want to gain insights on what is happening live. You can easily develop a scraping program to parse through many tweets on Twitter. You can also scrape news articles to find trends in what people are talking about. Packages for Python, such as BeautifulSoup can help you gain further insights.

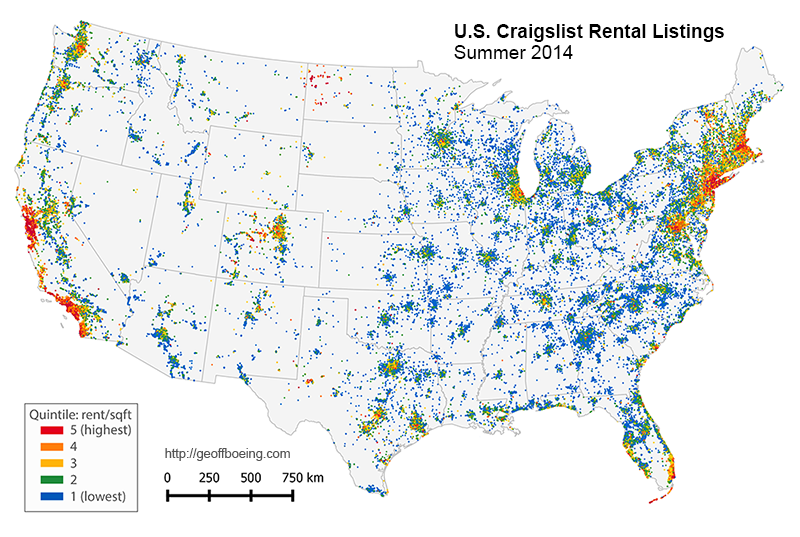

Many big data analytics insights that been made with web scraping. For example, the map below utilized web scraping to generate a map of the the number of orders made on Craigslist.

The structure of websites

Before we develop scraping algorithms, it’s probably important to also reference what we are actually going to scrape. Almost always, this is going to be Hypertext Markup Language (HTML). Below, find a sample of what a small HTML script looks like:

<!DOCTYPE html>

<html>

<body>

<h1>My First Heading</h1>

<p>My first paragraph.</p>

</body>

</html>

There are common patterns that are found in every HTML file, such as <h1> </h1>, <body><\body> , etc. You can think about these markers as being the placeholders for what our web-scraping algorithm will look for.

In the above HTML code, the <h1></h1> marker refers to the header string of the file. This is analogous to the “title” of any sort of article or paragraph.

The BeautifulSoup Library

The BeutifulSoup Library is a package that can be utilized to parse the HTML files that make up every website. BeautifulSoup needs to be imported into your python script before you can utilize its functions to parse through your site of interest.

In order to install this library you can run pip install beautifulsoup4

Great! We can now think of problems to solve that utilize this method. One problem that we can tackle is to perhaps observe trends in reporting related to COVID-19. Knowing these trends frames the conversations related to the pandemic. We can start by scraping a new site like the New York Times and storing a collection of all of the article titles that have keywords like “COVID-19”, “Coronavirus” , etc.

Let’s consider first a simple program that will scrape the New York Times website and then stores all of the article headers.

import requests

from bs4 import BeautifulSoup

story_heading_list = []

base_url = "http://www.nytimes.com"

r = requests.get(base_url)

soup = BeautifulSoup(r.text)

for heading in soup.find_all('h3'):

story_heading_list += [heading.contents[0].strip()]

print(story_heading_list)

['A GLOBAL CRISIS', 'U.S.', 'POLITICS', 'THE MEDICAL PICTURE', 'COMMUNICATING IN A PANDEMIC', 'Opinion', 'Editors’ Picks', 'Advertisement']

This simple program parses through the entire NYTimes website and stores the contents of any HTML heading that is part of the story-heading class. We can then filter out entries in our list and only store titles that contain our keywords related to the COVID-19 pandemic.

keywords = ["covid-19" , "coronavirus" , "covid" , "pandemic"]

# The empty list that we will add the Covid-19 related headers to.

covid_headers_list = []

for heading in story_heading_list:

# We also want to check for the case of the heading.

for word in heading.lower().split():

if word in keywords:

covid_headers_list += [heading]

print(covid_headers_list)

['COMMUNICATING IN A PANDEMIC']

Now that we have it stored, all that’s left to do is print the list of headers! We can even store the list in a .txt file for long term storage and further analytics.

import csv

with open("filesave.txt", 'w', newline='') as myfile:

wr = csv.writer(myfile, quoting=csv.QUOTE_ALL)

wr.writerow(covid_headers_list)